There have recently been some discussions on the ongoing role of L2s in the Ethereum ecosystem, especially in the face of two facts:

* L2s' progress to stage 2 (and, secondarily, on interop) has been far slower and more difficult than originally expected

* L1 itself is scaling, fees are very low, and gas limits are projected to increase greatly in 2026

Both of these facts, for their own separate reasons, mean that the original vision of L2s and their role in Ethereum no longer makes sense, and we need a new path.

First, let us recap the original vision. Ethereum needs to scale. The definition of "Ethereum scaling" is the existence of large quantities of block space that is backed by the full faith and credit of Ethereum - that is, block space where, if you do things (including with ETH) inside that block space, your activities are guaranteed to be valid, uncensored, unreverted, untouched, as long as Ethereum itself functions. If you create a 10000 TPS EVM where its connection to L1 is mediated by a multisig bridge, then you are not scaling Ethereum.

This vision no longer makes sense. L1 does not need L2s to be "branded shards", because L1 is itself scaling. And L2s are not able or willing to satisfy the properties that a true "branded shard" would require. I've even seen at least one explicitly say that they may never want to go beyond stage 1, not just for technical reasons around ZK-EVM safety, but also because their customers' regulatory needs require them to have ultimate control. This may be doing the right thing for your customers. But it should be obvious that if you are doing this, then you are not "scaling Ethereum" in the sense meant by the rollup-centric roadmap. But that's fine! It's fine because Ethereum itself is now scaling directly on L1, with large planned increases to its gas limit this year and in the years ahead.

We should stop thinking about L2s as literally being "branded shards" of Ethereum, with the social status and responsibilities that this entails. Instead, we can think of L2s as being a full spectrum, which includes both chains backed by the full faith and credit of Ethereum with various unique properties (eg. not just EVM), as well as a whole array of options at different levels of connection to Ethereum, that each person (or bot) is free to care about or not care about depending on their needs.

What would I do today if I were an L2?

* Identify a value add other than "scaling". Examples: (i) non-EVM specialized features/VMs around privacy, (ii) efficiency specialized around a particular application, (iii) truly extreme levels of scaling that even a greatly expanded L1 will not do, (iv) a totally different design for non-financial applications, eg. social, identity, AI, (v) ultra-low-latency and other sequencing properties, (vi) maybe built-in oracles or decentralized dispute resolution or other "non-computationally-verifiable" features

* If you're doing things with ETH or other Ethereum-issued assets, be stage 1 at the minimum (otherwise you really are just a separate L1 with a bridge, and you should just call yourself that)

* Support maximum interoperability with Ethereum, though this will differ for each one (eg. what if you're not EVM, or even not financial?)

From Ethereum's side, over the past few months I've become more convinced of the value of the native rollup precompile, particularly once we have enshrined ZK-EVM proofs that we need anyway to scale L1. This is a precompile that verifies a ZK-EVM proof, and it's "part of Ethereum", so (i) it auto-upgrades along with Ethereum, and (ii) if the precompile has a bug, Ethereum will hard-fork to fix the bug.

The native rollup precompile would make full, security-council-free, EVM verification accessible. We should spend much more time working out how to design it in such a way that if your L2 is "EVM plus other stuff", then the native rollup precompile would verify the EVM, and you only have to bring your own prover for the "other stuff" (eg. Stylus). This might involve a canonical way of exposing a lookup table between contract call inputs and outputs, and letting you provide your own values to the lookup table (that you would prove separately).
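To make the "EVM plus other stuff" idea more concrete, here is a toy model in Python. It assumes one possible reading of the design the post only outlines. The (hypothetical) native precompile checks the EVM part of a block and treats every call into non-EVM code as a lookup into a table of (input, output) pairs. The L2 then supplies its own verifier for just those table entries. `Step`, `native_evm_verify`, `verify_l2_block` and `custom_verify` are all made-up names, and no real proof system is involved.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Step:
    kind: str       # "evm", or "external" for a call into non-EVM code (eg. a Stylus-style program)
    payload: bytes  # for "external": the call input
    result: bytes   # for "external": the output the trace claims was returned


# Hypothetical lookup table exposed by the precompile: call input -> call output
LookupTable = Dict[bytes, bytes]


def native_evm_verify(trace: List[Step], table: LookupTable) -> bool:
    """Stand-in for the enshrined precompile. EVM steps are accepted as-is in this toy
    (a real precompile would check a ZK-EVM proof); every external call must match the table."""
    for step in trace:
        if step.kind == "external":
            if table.get(step.payload) != step.result:
                return False
        elif step.kind != "evm":
            return False
    return True


def verify_l2_block(trace: List[Step], table: LookupTable,
                    custom_verify: Callable[[bytes, bytes], bool]) -> bool:
    """The L2 brings its own verifier, but only for the lookup-table entries."""
    return native_evm_verify(trace, table) and all(
        custom_verify(inp, out) for inp, out in table.items()
    )
```

Here `custom_verify` stands in for the L2's own prover. As an example, suppose the toy rule is that the "other stuff" reverses its input. Then `verify_l2_block([Step("external", b"abc", b"cba")], {b"abc": b"cba"}, lambda i, o: o == i[::-1])` returns `True`.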

This would make it easy to have safe, strong, trustless interoperability with Ethereum. It also enables synchronous composability (see: https://t.co/9jy6v1X6Fw and https://t.co/gZmu3YjebM ). And from there, it's each L2's choice exactly what they want to build. Don't just "extend L1", figure out something new to add.

This of course means that some will add things that are trust-dependent, or backdoored, or otherwise insecure; this is unavoidable in a permissionless ecosystem where developers have freedom. Our job should be to make it clear to users what guarantees they have, and to build up the strongest Ethereum that we can.

I actually don't think it's complicated.

IMO the future of onchain mechanism design is mostly going to fit into one pattern:

[something that looks like a prediction market] -> [something that looks like a capture-resistant, non-financialized preference-setting gadget]

In other words:

* One layer that is maximally open and maximizes accountability (it's a market, anyone can buy and sell, if you make good decisions you win money if you make bad decisions you lose money)

* One layer that is decentralized and pluralistic, and that maximizes space for intrinsic motivation. This cannot be token-based, because token owners are not pluralistic, and anyone can buy in and get 51% of them. Votes here should be anonymous, ideally MACI'd to reduce risk of collusion.

The prediction market is the correct way to do a "decentralized executive", because the most logical primitive for "accountability" in a permissionless context is exactly that.

Though sometimes you will want to keep it simple, and do a centralized executive at that layer instead:

[replaceable centralized executive] -> [something that looks like a capture-resistant, non-financialized preference-setting gadget]

It is best to think in these two layers explicitly: (i) what is doing your execution, and (ii) what is doing your preference-setting and judging the executor(s).
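Below is a minimal Python sketch of the two layers, built on two assumptions of ours. The first layer uses a logarithmic market scoring rule (LMSR) market maker; the post does not specify a market design. The second layer is a simple majority of anonymous ballots. A real gadget would use something like MACI to resist collusion, and this toy does not model that. The names `LMSRMarket` and `resolve_by_committee` are hypothetical.

```python
import math
from typing import List


class LMSRMarket:
    """Binary market (outcome 0 = NO, 1 = YES) priced by Hanson's LMSR."""

    def __init__(self, b: float = 100.0):
        self.b = b            # liquidity parameter: larger b means prices move less per trade
        self.q = [0.0, 0.0]   # outstanding shares for [NO, YES]

    def _cost(self, q: List[float]) -> float:
        return self.b * math.log(sum(math.exp(x / self.b) for x in q))

    def price(self, outcome: int) -> float:
        exps = [math.exp(x / self.b) for x in self.q]
        return exps[outcome] / sum(exps)

    def buy(self, outcome: int, shares: float) -> float:
        """Record the purchase of `shares` of `outcome` and return what it cost."""
        new_q = list(self.q)
        new_q[outcome] += shares
        cost = self._cost(new_q) - self._cost(self.q)
        self.q = new_q
        return cost


def resolve_by_committee(ballots: List[bool]) -> int:
    """Second layer: the outcome is whatever a strict majority of (anonymous) ballots say."""
    return 1 if sum(ballots) * 2 > len(ballots) else 0
```

Once the committee resolves, each share of the winning outcome pays 1 unit. Traders therefore profit exactly to the extent that they correctly predicted the second layer's judgment.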

How I would do creator coins

We've seen about 10 years of people trying to do content incentivization in crypto, from early-stage platforms like Bihu and Steemit, to BitClout in 2021, to Zora, to tipping features inside of decentralized social, and more. So far, I think we have not been very successful, and I think this is because the problem is fundamentally hard.

First, my view of what the problem is. A major difference between doing "creator incentives" in the 00s and doing them today is that in the 00s, a primary problem was not having enough content at all. In the 20s, there's plenty of content: AI can generate an entire metaverse full of it for like $10. The problem is quality. And so your goal is not *incentivizing content*, it's *surfacing good content*.

Personally, I think that the most successful example of creator incentives we've seen is Substack. To see why, take a look at the top 10:

https://t.co/duaCaGNYXp

https://t.co/y1F9Td0Y52

https://t.co/xEMt8pIK74

Now, you may disagree with many of these authors. But I have no doubt that:

1. They are on the whole high quality, and contribute positively to the discussion

2. They are mostly people who would not have been elevated without Substack's presence

So Substack is genuinely surfacing both quality and pluralism.

Now, we can compare to creator coin projects. I don't want to pick on a single one, because I think there's a failure mode of the entire category.

For example:

Top Zora creator coins: https://t.co/238cqf2bX1

BitClout: https://t.co/0jVmotkpFw

Basically, the top 10 are people who already have very high social status, and who are often impressive but primarily for reasons other than the content they create.

At the core, Substack is a simple subscription service: you pay $N per month, and you get to see the person's articles. But a big part of Substack's success is that they did not just set the mechanism and forget. Their launch process was very hands-on, deliberately seeding the platform with high-quality creators, based on a very particular vision of what kind of high-quality intellectual environment they wanted to foster, including giving selected people revenue guarantees.

So now, let's get to one idea that I think could work (of course, coming up with new ideas is inherently a more speculative project than criticizing existing ones, and more prone to error).

Create a DAO, that is *not* token-based. Instead, the inspiration should be Protocol Guild: there are N members, and they can (anonymously) vote new members in and out. If N gets above ~200, consider auto-splitting it.

Importantly, do _not_ try to make the DAO universal or even industry-wide. Instead, embrace the opinionatedness. Be okay with having a dominant type of content (long-form writing, music, short-form video, long-form video, fiction, educational...), and be okay with having a dominant style (eg. country or region of origin, political viewpoint, if within crypto which projects you're most friendly to...). Hand-pick the initial membership set, in order to maximize its alignment with the desired style.

The goal is to have a group that is larger than one creator and can accumulate a public brand and collectively bargain to seek revenue opportunities, but at the same time small enough that internal governance is tractable.

Now, here is where the tokens come in. In general, one of my hypotheses this decade is that a large portion of effective governance mechanisms will all have the form factor of "large number of people and bots participating in a prediction market, with the output oracle being a diverse set of people optimized for mission alignment and capture resistance". In this case, what we do is: anyone can become a creator and create a creator coin, and then, if they get admitted to a creator DAO, a portion of their proceeds from the DAO are used to burn their creator coins.

This way, the token speculators are NOT participating in a recursive-speculation attention game backed only by itself. Instead, they are specifically being predictors of what new creators the high-value creator DAOs will be willing to accept. At the same time, they also provide a valuable service to the creator DAOs: they are helping surface promising creators for the DAOs to choose from.

So the ultimate decider of who rises and falls is not speculators, but high-value content creators (we make the assumption that good creators are also good judges of quality, which seems often true). Individual speculators can stay in the game and thrive to the extent that they do a good job of predicting the creator DAOs' actions.
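The toy model below fills in details the post leaves open, and all names and numbers in it are hypothetical. Admission takes a simple majority of current members' anonymous yes/no votes. A fixed fraction `BURN_SHARE` of each admitted creator's DAO proceeds buys back and burns that creator's coins at a given price. `SPLIT_THRESHOLD` mirrors the "~200 members" suggestion.

```python
from dataclasses import dataclass, field
from typing import List, Set

BURN_SHARE = 0.2        # hypothetical fraction of DAO proceeds used for buy-and-burn
SPLIT_THRESHOLD = 200   # "if N gets above ~200, consider auto-splitting it"


@dataclass
class CreatorCoin:
    supply: float


@dataclass
class CreatorDAO:
    members: Set[str] = field(default_factory=set)

    def vote_admit(self, candidate: str, ballots: List[bool]) -> bool:
        # One anonymous ballot per current member; a strict majority admits.
        if len(ballots) != len(self.members):
            raise ValueError("expected one ballot per member")
        if sum(ballots) * 2 > len(ballots):
            self.members.add(candidate)
            return True
        return False

    def should_split(self) -> bool:
        return len(self.members) > SPLIT_THRESHOLD

    def pay_proceeds(self, creator: str, proceeds: float,
                     coin: CreatorCoin, coin_price: float) -> float:
        """Use part of a member's proceeds to buy and burn their creator coin.
        Returns the number of coins burned (0 for non-members)."""
        if creator not in self.members:
            return 0.0
        burned = min(coin.supply, proceeds * BURN_SHARE / coin_price)
        coin.supply -= burned
        return burned
```

Speculators who bought a creator's coin before admission gain only if the DAO later admits that creator and burns start. That is the "predicting the creator DAOs' actions" role described above.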

For the next five years, the Ethereum Foundation is entering a period of mild austerity, in order to be able to simultaneously meet two goals:

1. Deliver on an aggressive roadmap that ensures Ethereum's status as a performant and scalable world computer that does not compromise on robustness, sustainability and decentralization.

2. Ensure the Ethereum Foundation's own ability to sustain itself over the long term, and protect Ethereum's core mission and goals, including both the core blockchain layer and users' ability to access and use the chain with self-sovereignty, security and privacy.

To this end, my own share of the austerity is that I am personally taking on responsibilities that might in another time have been "special projects" of the EF. Specifically, we are seeking the existence of an open-source, secure and verifiable full stack of software and hardware that can protect both our personal lives and our public environments ( see https://t.co/GzgBS9sh87 ). This includes applications such as finance, communication and governance, blockchains, operating systems, secure hardware, biotech (including both personal and public health), and more. If you have seen the Vensa announcement (seeking to make open silicon a commercially viable reality at least for security-critical applications), the https://t.co/cuyU9Chs1y including recent versions with built in ZK + FHE + differential-privacy features, the air quality work, my donations to encrypted messaging apps, my own enthusiasm and use for privacy-preserving, walkaway-test-friendly and local-first software (including operating systems), then you know the general spirit of what I am planning to support.

For this reason I have just withdrawn 16,384 ETH, which will be deployed toward these goals over the next few years. I am also exploring secure decentralized staking options that will allow even more capital from staking rewards to be put toward these goals in the long term.

Ethereum itself is an indispensable part of the "full-stack openness and verifiability" vision. The Ethereum Foundation will continue with a steadfast focus on developing Ethereum, with that goal in mind. "Ethereum everywhere" is nice, but the primary priority is "Ethereum for people who need it". Not corposlop, but self-sovereignty, and the baseline infrastructure that enables cooperation without domination.

In a world where many people's default mindset is that we need to race to become a big strong bully, because otherwise the existing big strong bullies will eat you first, this is the needed alternative. It will involve much more than technology to succeed, but the technical layer is something which is in our control to make happen. The tools to ensure your, and your community's, autonomy and safety, as a basic right that belongs to everyone. Open not in a bullshit "open means everyone has the right to buy it from us and use our API for $200/month" way, but actually open, and secure and verifiable so that you know that your technology is working for you.

@TrustlessState I'll do the ill-advised thing and try to explain my own thought process and constraints (and possibly unadmitted cowardice) that guide when I do and don't speak on these kinds of political topics - and further down just say what some of my direct opinions are. It's 2026, the

The situation in Iran is continuing to get much worse. Much respect for everyone going through extreme danger to try to increase the chance that Iranian people can be free.

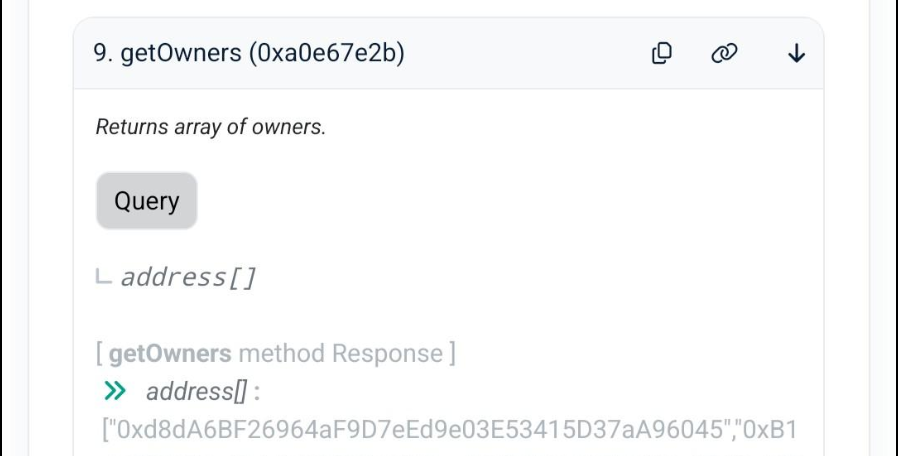

This morning I needed to check which addresses were signers on my multisig.

I was on my phone, and did not have the Safe app installed there.

I realized that I could just look up my address on etherscan, and use the "read contract" feature to get what I want directly.

These are the kinds of additional UX benefits you get if your wallet or application is open source and passes the walkaway test. Giving users access to alternative options often helps in unexpected situations much more mundane than the Safe website "walking away" outright.
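The same lookup also works without any block explorer. You can send a standard `eth_call` JSON-RPC request to any Ethereum node and decode the ABI-encoded `address[]` that the Safe's `getOwners()` function returns. This is a minimal sketch. It assumes `0xa0e67e2b` is the 4-byte selector of `getOwners()`, which is worth double-checking against the contract ABI. It leaves out actually sending the request to a node, and the helper names are hypothetical.

```python
import json
from typing import List

GET_OWNERS_SELECTOR = "0xa0e67e2b"  # first 4 bytes of keccak256("getOwners()"), assumed


def build_get_owners_call(safe_address: str) -> str:
    """JSON-RPC body for an eth_call of getOwners() against the latest block."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": 1,
        "method": "eth_call",
        "params": [{"to": safe_address, "data": GET_OWNERS_SELECTOR}, "latest"],
    })


def decode_address_array(result_hex: str) -> List[str]:
    """Decode an ABI-encoded dynamic address[] return value."""
    data = bytes.fromhex(result_hex.removeprefix("0x"))
    offset = int.from_bytes(data[0:32], "big")            # where the array starts
    length = int.from_bytes(data[offset:offset + 32], "big")
    owners = []
    for i in range(length):
        word = data[offset + 32 + 32 * i: offset + 64 + 32 * i]
        owners.append("0x" + word[12:].hex())             # address = low 20 bytes of the word
    return owners
```

Posting the output of `build_get_owners_call(...)` to any RPC endpoint and passing the `result` field to `decode_address_array` gives the signer list. This is roughly the same thing the explorer's "read contract" tab does for you.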

(Of course, this exact workflow will eventually have to break because of privacy. One way to make an equivalent privacy-friendly workflow is that the user can save a "viewing key" that is an extended version of their address and also contains extra private info, and block explorers can support it, putting the private info in the fragment (the part after the #) of the URL so it stays client side. Though this has the weakness that encouraging people to paste any kind of secret into URLs or webpages is risky; ultimately we just need to be able to do more things through your wallet directly.)
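Python's standard `urllib.parse` shows why the fragment stays client side. It is split off from the path and query, and browsers do not include it in the HTTP request they send. The explorer URL layout and the `vk=` parameter below are hypothetical illustrations, not an existing explorer feature.

```python
from urllib.parse import urlsplit, urlunsplit


def viewing_url(explorer: str, address: str, viewing_key: str) -> str:
    # Hypothetical layout: public address in the path, private viewing key in the fragment.
    return f"{explorer}/address/{address}#vk={viewing_key}"


def url_sent_to_server(url: str) -> str:
    """The URL minus its fragment, which is all that reaches the server."""
    parts = urlsplit(url)
    return urlunsplit((parts.scheme, parts.netloc, parts.path, parts.query, ""))
```

The page's own JavaScript can still read the fragment (for example via `location.hash`) to decrypt data locally. That is also exactly why the post flags pasting secrets into webpages as risky.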

The scaling hierarchy in blockchains:

Computation > data > state

Computation is easier to scale than data. You can parallelize it, require the block builder to provide all kinds of "hints" for it, or just replace arbitrary amounts of it with a proof of it.

Data is in the middle. If an availability guarantee on data is required, then that guarantee is required, no way around it. But you _can_ split it up and erasure code it, a la PeerDAS. You can do graceful degradation for it: if a node only has 1/10 the data capacity of the other nodes, it can always produce blocks 1/10 the size.
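Here is a minimal Reed-Solomon sketch over a small prime field to illustrate "split it up and erasure code it". The k data chunks are treated as evaluations of a polynomial of degree below k, then extended to 2k evaluations. Any k of the 2k are enough to recover everything. PeerDAS does this over a much larger field, with KZG commitments and column sampling, and none of that is modeled here. The modulus `P` and the function names are our own choices.

```python
from typing import Dict, List

P = 2**31 - 1  # a small prime modulus for illustration; PeerDAS uses a much larger field


def _lagrange_eval(points: Dict[int, int], x: int) -> int:
    """Evaluate at x the unique polynomial of degree < len(points) through `points`, mod P."""
    total = 0
    for xi, yi in points.items():
        num, den = 1, 1
        for xj in points:
            if xj != xi:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        total = (total + yi * num * pow(den, P - 2, P)) % P  # Fermat inverse of den
    return total


def extend(chunks: List[int]) -> List[int]:
    """Chunks are evaluations at x = 0..k-1; return evaluations at x = 0..2k-1."""
    k = len(chunks)
    base = dict(enumerate(chunks))
    return chunks + [_lagrange_eval(base, x) for x in range(k, 2 * k)]


def recover(available: Dict[int, int], k: int) -> List[int]:
    """Recover the original k chunks from any k of the 2k extended evaluations."""
    if len(available) < k:
        raise ValueError("need at least k evaluations")
    subset = dict(list(available.items())[:k])
    return [_lagrange_eval(subset, x) for x in range(k)]
```

For example, after `extend([5, 17, 42, 99])` you can discard any four of the eight values, and `recover` on the remaining four returns the original chunks. This is why no single node has to hold all of the data.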

State is the hardest. To guarantee the ability to verify even one transaction, you need the full state. If you replace the state with a tree and keep the root, you need the full state to be able to update that root. There _are_ ways to split it up, but they involve architecture changes and are fundamentally not general-purpose.

Hence, if you can replace state with data (without introducing new forms of centralization), by default you should seriously consider it. And if you can replace data with computation (without introducing new forms of centralization), by default you should seriously consider it.

I no longer agree with this previous tweet of mine - since 2017, I have become a much more willing connoisseur of mountains. It's worth explaining why.

https://t.co/LerCobzgvo

First, the original context. That tweet was in a debate with Ian Grigg, who argued that blockchains should track the order of transactions, but not the state (eg. user balances, smart contract code and storage):

> The messages are logged, but the state (e.g., UTXO) is implied, which means it is constructed by the computer internally, and then (can be) thrown away.

I was heavily against this philosophy, because it would imply that users have no way to get the state other than either (i) running a node that processed every transaction in all of history, or (ii) trusting someone else.

In blockchains that commit to the state in the block header (like Ethereum), you can simply prove any value in the state with a Merkle branch. This is conditional on the honest majority assumption: if >= 50% of the consensus participants are honest, then the chain with the most PoW (or PoS) support will be valid, and so the state root will be correct.
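This is a minimal sketch of proving one value against a committed root. It uses a plain binary Merkle tree with SHA-256 and no leaf/node domain separation. Ethereum's actual state uses a Merkle-Patricia trie hashed with Keccak-256, so this only illustrates the principle. A client holding the root needs a logarithmic number of sibling hashes, not the full state.

```python
import hashlib
from typing import List


def h(b: bytes) -> bytes:
    return hashlib.sha256(b).digest()


def build_levels(leaves: List[bytes]) -> List[List[bytes]]:
    """All tree levels, from hashed leaves up to the root (leaf count must be a power of two)."""
    if not leaves or len(leaves) & (len(leaves) - 1):
        raise ValueError("leaf count must be a power of two")
    level = [h(x) for x in leaves]
    levels = [level]
    while len(level) > 1:
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        levels.append(level)
    return levels


def prove(levels: List[List[bytes]], index: int) -> List[bytes]:
    """Merkle branch: the sibling hash at each level, bottom-up."""
    branch = []
    for level in levels[:-1]:
        branch.append(level[index ^ 1])
        index //= 2
    return branch


def verify(root: bytes, leaf: bytes, index: int, branch: List[bytes]) -> bool:
    node = h(leaf)
    for sibling in branch:
        node = h(node + sibling) if index % 2 == 0 else h(sibling + node)
        index //= 2
    return node == root
```

The same structure also shows the cost on the other side. Recomputing the root after any change requires the sibling hashes along that path, and in general that means someone has to hold the whole tree.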

Trusting an honest majority is far better than trusting a single RPC provider. Not trusting at all (by personally verifying every transaction in the chain) is theoretically ideal, but it's a computation load infeasible for regular users, unless we take the (even worse) tradeoff of keeping blockchain capacity so low that most people cannot even use the chain.

Now, what has changed since then?

The biggest thing is of course ZK-SNARKs. We now have a technology that lets you verify the correctness of the chain, without literally re-executing every transaction. WE INVENTED THE THING THAT GETS YOU THE BENEFITS WITHOUT THE COSTS! This is like if someone from the future teleported back into US healthcare debates in 2008, and demonstrated a clearly working pill that anyone could make for $15 that cured all diseases. Like, yes, if we have that pill, we should get the government fully out of healthcare, let people make the pill and sell it at Walgreens, and healthcare becomes super affordable so everyone is happy. ZK-SNARKs are literally like that but for the block size war. (With two asterisks for block building centralization and data bandwidth, but that's a separate topic)

With better technology, we should raise our expectations, and revisit tradeoffs that we made grudgingly in a previous era.

But also, I have actually changed my mind on some of the underlying issues. In 2017, I was thinking about blockchains in terms of academic assumptions - what is okay to rely on honest majority for, when we are ok with 1-of-N trust assumption, etc. If a construction gave better properties under known-acceptable assumptions, I would eagerly embrace it.

On a raw subconscious level, I don't think I was sufficiently appreciative of the fact that _in the real world, lots of things break_. Sometimes the p2p network goes down. Sometimes the p2p network has 20x the latency you expected - anyone who has played WoW can attest to long spans of time when the latency spiked up from its usual ~200ms to 1000-5000ms. Sometimes a third party service you've been relying on for years shuts down, and there isn't a good alternative. If the alternative is that you personally go through a github repo and figure out how to PERSONALLY RUN A SERVER, lots of people will give up and never figure it out and end up permanently losing access to their money. Sometimes mining or staking gets concentrated to the point where 51% attacks are very easy to imagine, and you almost have to game-theoretically analyze consensus security as though 75% of miners or stakers are controlled by one single agent. Sometimes, as we saw with tornado cash, intermediaries all start censoring some application, and your *only* option becomes to directly use the chain.

If we are making a self-sovereign blockchain to last through the ages, THE ANSWER TO THE ABOVE CONUNDRUMS CANNOT ALWAYS BE "CALL THE DEVS". If it is, the devs themselves become the point of centralization - they become DEVS in the ancient Roman sense, where the letter V was used to represent the U sound.

The Mountain Man's cabin is not meant as the replacement lifestyle for everyone. It is meant as the safe place to retreat to when things go wrong. It is also meant as the universal BATNA ("Best Alternative to a Negotiated Agreement") - the alternative option that improves your well-being not just in the case when you end up needing it, but also because knowledge of it existing motivates third parties to give you better terms. This is like how Bittorrent existing is an important check on the power of music and video streaming platforms, driving them to offer customers better terms.

We do not need to start living every day in the Mountain Man's cabin. But part of maintaining the infinite garden of Ethereum is certainly keeping the cabin well-maintained.

The relationship between "institutions" and "cypherpunk" is complex and needs to be understood properly. In truth, institutions (both governments and corporations) are neither guaranteed friend nor foe.

Exhibit A: https://t.co/PyTcxu1lkV European Union seeking to aggressively support open source

Exhibit B: https://t.co/RUQvp0nh1B European Union bureaucrats want Chat Control (mandatory encryption backdoors)

Exhibit C: the Patriot Act (which, we must note, _neither party_ now expresses much interest in repealing)

Exhibit D: the US government is now famously a user of Signal

Basically, the game-theoretic optimum for an institution is to have control over what it can control, but also to resist intrusion by others. In fact, institutions are often staffed by highly sophisticated people, who have a much deeper understanding of these issues than regular people and a much deeper will to do something about them. An important driver of many people's refusal to use data-slurping corposlop software is company policy.

Some people have the misperception that my words yesterday about the importance of using tools that maximize your data self-sovereignty are something that will appeal to individual enthusiast communities, but will be rejected as unrealistic by efficiency-minded "serious people". But this is false: "serious people" are often _more_ robustness-minded than retail and many already have policies even stricter than what I advocate.

I predict that in this next era, this trend will accelerate: institutions (again, both corporations and governments) will want to more aggressively minimize their external trust dependencies, and have more guarantees over their operations. Again, this does not mean that they want to minimize *your dependency on them* - that's the thing that we as the Ethereum community must insist on, and build tools to help people achieve. But that's precisely the complexity of the situation.

In the stablecoin world, this means:

* Asset issuers in the EU will want a chain whose governance center of gravity is not overly US-based, and vice versa (same for other pairs of countries)

* Governments will push for more KYC, but at the same time privacy tools will improve, because cypherpunks are working hard to make them improve. The more realistic equilibrium is that non-KYC'd assets will exist, and the ability to use them with strong privacy will grow, but also over the next decade we'll see more attempts at "ZK proof of source of funds". We will see ideological disputes over how to respond to this.

* Institutions will want to control their own wallets, and even their own staking if they stake ETH. This is actually good for Ethereum staking decentralization. Of course, they will not proactively work to give you, the user, a self-sovereign wallet. Doing _that_ in a way that is secure for regular users is the task of Ethereum cypherpunks (see: smart contract wallets, social recovery).

Ethereum is the censorship-resistant world computer: we do not have to approve of every activity that happens on the world computer. I did not much approve of three-million-dollar digital monkeys, and I will not much approve of privacy with centralized (including multisig/threshold) decryption backdoors. But the existence of those things is not up to me to decide. What *is* up to us is to build the world that we want to see on top of Ethereum, and make that world strong, so that it can prosper in the competition, both on the Ethereum chain itself, and against the centralized world.

At best, we can interoperate with the non-cypherpunk world to better bootstrap the cypherpunk world. For example, spreads on decentralized stablecoins can decrease if it's easy for people to run arbitrage strategies where they hold positive quantities of a centralized stablecoin and negative quantities of the decentralized one. If we want prediction markets to avoid sliding into sports betting corposlop, we should explore improving their liquidity by helping traditional financial entities use them to hedge against their existing risks. What is a bet from one side is often a purchase of insurance from the other side, and if we want prediction markets to evolve in a healthy way, it may be overall better for the counterparties of the sophisticated traders earning big APYs to be buyers of insurance than to be naive bettors who constantly lose money. Synergies like this should be explored across all domains.

This is why I do not believe that cypherpunk requires total hostility to institutions. Instead, I support a policy that institutions are already used to using against each other: openness to win-win cooperation, but aggressively standing up for our own interests. And in this case, our interest is building a financial, social and identity layer that protects people's self-sovereignty and freedom.

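The recent ban on "yap-to-earn" products has prompted everyone to think about decentralized social.

@VitalikButerin, @suji_yan and @colinwu will talk with the Chinese-speaking community in a Twitter Space today (January 23) at 3 PM.

Why do decentralized social products matter?

Why has no product reached PMF yet?

Where will the breakthrough come from?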

2026 is the year we take back lost ground in computing self-sovereignty.

But this applies far beyond the blockchain world.

In 2025, I made two major changes to the software I use:

* Switched almost fully to https://t.co/ZIKj4U5XFM (open source encrypted decentralized docs)

* Switched decisively to Signal as primary messenger (away from Telegram). Also installed Simplex and Session.

Changes I've made this year:

* Google Maps -> OpenStreetMap https://t.co/Xm0pad5nh9; OrganicMaps https://t.co/yvbwXqEPwo is the best mobile app I've seen for it. It is not just open source but also privacy-preserving, because it works locally. That matters, because it's good to reduce the number of apps/places/people who know anything about your physical location

* Gmail -> Protonmail (though ultimately, the best thing is to use proper encrypted messengers outright)

* Prioritizing decentralized social media (see my previous post)

Also continuing to explore local LLM setups. This is one area that still needs a lot of work on "the last mile": lots of amazing local models, including CPU-friendly and even phone-friendly ones, exist, but they're not well integrated. For example, there isn't a good "Google Translate equivalent" UI that plugs into local LLMs, nor good equivalents for transcription / audio input or search over personal docs, and ComfyUI is great but we need Photoshop-style UX (I'm sure for each of those items people will link me to various github repos in the replies, but *the whole problem* is that it's "various github repos" and not a one-stop shop). Also, I don't want to keep Ollama always running, because that makes my laptop consume 35 W. So there's still a way to go, but it's made huge progress: a year ago, most of these local models did not even exist yet!

Ideally we push as far as we can with local LLMs, using specialized fine-tuned models to make up for small param count where possible. Then, for the heavy-usage stuff, we can stack (i) per-query ZKP payments, (ii) TEEs, and (iii) local query filtering (eg. have a small model automatically remove sensitive details from docs before you push them up to big models). Basically, combine all the imperfect things into a best-effort setup, though ultimately, ideally, we figure out ultra-efficient FHE.
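Of the techniques in that stack, local query filtering is the easiest to sketch. The toy below uses regular expressions as a stand-in for the "small model"; a real setup would use a local LLM or NER model, which would catch far more. It replaces emails, phone-number-like strings and Ethereum-style addresses with placeholders before text leaves the machine, and keeps the mapping locally so the answer can be re-filled. The patterns and function names are illustrative assumptions, not a complete PII filter.

```python
import re
from typing import Dict, Tuple

# Illustrative patterns only; applied in this order (addresses before phone numbers).
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "ETH_ADDRESS": re.compile(r"\b0x[0-9a-fA-F]{40}\b"),
    "PHONE": re.compile(r"\+?\d[\d\s-]{7,}\d"),
}


def redact(text: str) -> Tuple[str, Dict[str, str]]:
    """Replace sensitive spans with numbered placeholders; return the text and the
    placeholder -> original mapping, which never leaves the local machine."""
    mapping: Dict[str, str] = {}
    for label, pattern in PATTERNS.items():
        def sub(m: re.Match) -> str:
            key = f"[{label}_{len(mapping)}]"
            mapping[key] = m.group(0)
            return key
        text = pattern.sub(sub, text)
    return text, mapping


def restore(text: str, mapping: Dict[str, str]) -> str:
    """Put the original values back into the big model's (placeholder-bearing) answer."""
    for key, value in mapping.items():
        text = text.replace(key, value)
    return text
```

Only the redacted text would be sent to the remote model. `restore` runs locally on whatever comes back.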

Sending all your data to third party centralized services is unnecessary. We have the tools to do much less of that. We should continue to build and improve, and much more actively use them.

(btw I really think @SimpleXChat should lowercase the X in their name. An N-dimensional triangle is a much cooler thing to be named after than "simple twitter")

In 2026, I plan to be fully back to decentralized social.

If we want a better society, we need better mass communication tools. We need mass communication tools that surface the best information and arguments and help people find points of agreement. We need mass communication tools that serve the user's long-term interest, not maximize short-term engagement. There is no simple trick that solves these problems. But there is one important place to start: more competition. Decentralization is the way to enable that: a shared data layer, with anyone being able to build their own client on top.

In fact, since the start of the year I've been back to decentralized social already. Every post I've made this year, or read this year, I made or read with https://t.co/BJ3J4TvNNu, a multi-client that covers reading and posting to X, Lens, Farcaster and Bluesky (though Bluesky has a 300 char limit, so they don't get to see my beautiful long rants).

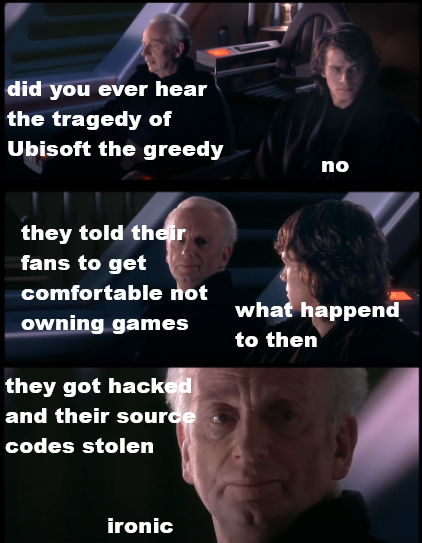
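A multi-client like that works because of the "shared data layer, many clients" model. Here is a minimal sketch of the posting side. It assumes a hypothetical `Network` record per backend with a posting callback. The only length limit taken from the post is Bluesky's 300 characters. This sketch truncates over-long posts and appends a link to the full post rather than splitting them into a thread; that is a design choice of ours, not necessarily what the client above does.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional


@dataclass
class Network:
    name: str
    max_chars: Optional[int]     # None = no limit enforced by this sketch
    send: Callable[[str], None]  # hypothetical per-network posting function


def fit(text: str, limit: Optional[int], full_link: str) -> str:
    """Truncate `text` so that it plus a link to the full post fits in `limit` characters."""
    if limit is None or len(text) <= limit:
        return text
    suffix = "... " + full_link
    if limit <= len(suffix):
        return text[:limit]
    return text[: limit - len(suffix)].rstrip() + suffix


def cross_post(text: str, networks: Dict[str, Network], full_link: str) -> Dict[str, str]:
    """Send an appropriately sized version of `text` to every network; return what was sent."""
    sent = {}
    for name, net in networks.items():
        body = fit(text, net.max_chars, full_link)
        net.send(body)
        sent[name] = body
    return sent
```

Because every backend is just another `Network` entry, adding a new protocol is a matter of writing one `send` function. That is the kind of competition between clients that a shared data layer makes possible.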

But crypto social projects have often gone the wrong way. Too often, we in crypto think that if you insert a speculative coin into something, that counts as "innovating", and moves the world forward. Mixing money and social is not inherently wrong: Substack shows that it's possible to create an economy that supports very high-quality content. But Substack is about _subscribing to creators_, not _creating price bubbles around them_. Over the past decade, we have seen many attempts at incentivizing creators by creating price bubbles around them, and they have all failed by (i) rewarding not content quality, but pre-existing social capital, and (ii) the tokens all going to zero after one or two years anyway.

Too many people make galaxy-brained arguments that creating new markets and new assets is automatically good because it "elicits information", when the rest of their product development actions clearly betray that they're not actually interested in maximizing people's ability to benefit from that information. That is not Hayekian info-utopia, that is corposlop.

Hence, decentralized social should be run by people who deeply believe in the "social" part, and are motivated first and foremost by solving the problems of social.

The Aave team has done a great job stewarding Lens up to this point. I'm excited about what will happen to Lens over the next year, because I think the new team coming in are people who actually are interested in the "social": even back when the decentralized social space barely existed, they were trying to figure out how to do encrypted tweets.

I plan to post more there this year.

I encourage everyone to spend more time in Lens, Farcaster and the broader decentralized social world this year. We need to move beyond everyone constantly tweeting inside a single global info warzone, and into a reopened frontier, where new and better forms of interaction become possible.

@donnoh_eth I'm definitely more in favor of native rollups than before.

Before, a big reason why I was against them was that a native rollup precompile must be used either in "zk mode" or in "optimistic mode", and ZK-EVMs were too immature for ZK mode, and so if we give L2s the choices "have 2-7

We need more DAOs - but different and better DAOs.

The original drive to build Ethereum was heavily inspired by decentralized autonomous organizations: systems of code and rules that lived on decentralized networks that could manage resources and direct activity, more efficiently and more robustly than traditional governments and corporations could.

Since then, the concept of DAOs has migrated to essentially referring to a treasury controlled by token holder voting - a design which "works", hence why it got copied so much, but a design which is inefficient, vulnerable to capture, and fails utterly at the goal of mitigating the weaknesses of human politics. As a result, many have become cynical about DAOs.

But we need DAOs.

* We need DAOs to create better oracles. Today, decentralized stablecoins, prediction markets, and other basic building blocks of defi are built on oracle designs that we are not satisfied with. If the oracle is token based, whales can manipulate the answer on a subjective issue and it becomes difficult to counteract them. Fundamentally, a token-based oracle cannot have a cost of attack higher than its market cap, which in turn means it cannot secure assets without extracting rent higher than the discount rate. And if the oracle uses human curation, then it's not very decentralized. The problem here is not greed. The problem is that we have bad oracle designs, we need better ones, and bootstrapping them is not just a technical problem but also a social problem.

* We need DAOs for onchain dispute resolution, a necessary component of many types of more advanced smart contract use cases (eg. insurance). This is the same type of problem as price oracles, but even more subjective, and so even harder to get right.

* We need DAOs to maintain lists. This includes: lists of applications known to be secure or not scams, lists of canonical interfaces, lists of token contract addresses, and much more.

* We need DAOs to get projects off the ground quickly. If you have a group of people, who all want something done and are willing to contribute some funds (perhaps in exchange for benefits), then how do you manage this, especially if the task is too short-duration for legal entities to be worth it?

* We need DAOs to do long-term project maintenance. If the original team of a project disappears, how can a community keep going, and how can new people coming in get the funding they need?
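The market-cap bound for token-based oracles above can be made concrete with a simple perpetuity model. This is a minimal sketch under loudly stated assumptions: the token's market cap is taken to equal the present value of the rent it extracts forever (annual rent divided by the discount rate), and the oracle counts as secure only if that cap covers the assets it secures. The function names are illustrative.

```python
def token_market_cap(annual_rent: float, discount_rate: float) -> float:
    """Assumed model: token value = present value of a perpetual rent stream."""
    return annual_rent / discount_rate


def is_secure(assets_secured: float, annual_rent: float, discount_rate: float) -> bool:
    """Cost of attack is at most the market cap, so the cap must cover the assets."""
    return token_market_cap(annual_rent, discount_rate) >= assets_secured


def min_annual_rent(assets_secured: float, discount_rate: float) -> float:
    """Rearranging rent / r >= assets gives rent >= r * assets.

    That is, yearly rent as a fraction of the assets secured must be at
    least the discount rate.
    """
    return discount_rate * assets_secured


# Securing $1B of assets at a 5% discount rate needs roughly $50M per year of rent.
print(min_annual_rent(1_000_000_000, 0.05))
```

Under this toy model, every dollar the oracle secures costs users the discount rate in rent each year, which is the extraction the text objects to.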

One framework that I use to analyze this is "convex vs concave" from https://t.co/1BrMsUAKWK . If the DAO is solving a concave problem, then it is in an environment where, if faced with two possible courses of action, a compromise is better than a coin flip. Hence, you want systems that maximize robustness by averaging (or rather, medianing) in input from many sources, and protect against capture and financial attacks. If the DAO is solving a convex problem, then you want the ability to make decisive choices and follow through on them. In this case, leaders can be good, and the job of the decentralized process should be to keep the leaders in check.
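A toy calculation shows the distinction, and why "medianing" helps in the concave case. This is an illustrative sketch: the two payoff functions and the input numbers are made up for the example, not taken from the linked post.

```python
from statistics import mean, median


def concave(x: float) -> float:
    """Concave payoff: the best outcome is a balanced choice at x = 0.5."""
    return -((x - 0.5) ** 2)


def convex(x: float) -> float:
    """Convex payoff: committing fully to either extreme beats splitting the difference."""
    return (x - 0.5) ** 2


def compromise_vs_coin_flip(payoff, a: float, b: float) -> tuple[float, float]:
    """Return (payoff of the midpoint, expected payoff of a 50/50 coin flip between a and b)."""
    return payoff((a + b) / 2), (payoff(a) + payoff(b)) / 2


print(compromise_vs_coin_flip(concave, 0.0, 1.0))  # compromise scores higher
print(compromise_vs_coin_flip(convex, 0.0, 1.0))   # coin flip scores higher

# Aggregating many inputs when two attackers report absurd values.
honest = [100, 101, 99, 100, 102]
reports = honest + [10_000, 10_000]
print(mean(reports), median(reports))  # the mean is dragged far off; the median stays at 101
```

The median only moves if attackers control a large share of the inputs, which is the robustness property you want when compromise beats a coin flip.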

For all of this to work, we need to solve two problems: privacy, and decision fatigue. Without privacy, governance becomes a social game (see https://t.co/uMXcuzQNjM ). And if people have to make decisions every week, for the first month you see excited participation, but over time willingness to participate, and even to stay informed, declines.

I see modern technology as opening the door to a renaissance here. Specifically:

* ZK (and in some cases MPC/FHE, though these should be used only when ZK alone cannot solve the problem) for privacy

* AI to solve decision fatigue

* Consensus-finding communication tools (like https://t.co/Nzord32Ub1, but going further)

AI must be used carefully: we must *not* put full-size DeepSeek (or worse, GPT 5.2) in charge of a DAO and call it a day. Rather, AI must be introduced thoughtfully, as something that scales and enhances human intention and judgement, rather than replacing it. This could be done at DAO level (eg. see how https://t.co/pCHI7Mlo2m works), or at individual level (user-controlled local LLMs that vote on the user's behalf).

It is important to think about the "DAO stack" as also including the communication layer, hence the need for forums and platforms specially designed for the purpose. A multisig plus well-designed consensus-finding tools can easily beat idealized collusion-resistant quadratic funding plus crypto twitter.

But in all cases, we need new designs. Projects that need new oracles and want to build their own should see that as 50% of their job, not 10%.

Projects working on new governance designs should build with ZK and AI in mind, and they should treat the communication layer as 50% of their job, not 10%.

This is how we can ensure the decentralization and robustness of the Ethereum base layer also applies to the world that gets built on top.

An important, and perennially underrated, aspect of "trustlessness", "passing the walkaway test" and "self-sovereignty" is protocol simplicity.

Even if a protocol is super decentralized with hundreds of thousands of nodes, and it has 49% byzantine fault tolerance, and nodes fully verify everything with quantum-safe peerdas and starks, if the protocol is an unwieldy mess of hundreds of thousands of lines of code and five forms of PhD-level cryptography, ultimately that protocol fails all three tests:

* It's not trustless because you have to trust a small class of high priests who tell you what properties the protocol has

* It doesn't pass the walkaway test because if existing client teams go away, it's extremely hard for new teams to get up to the same level of quality

* It's not self-sovereign because if even the most technical people can't inspect and understand the thing, it's not fully yours

It's also less secure, because each part of the protocol, especially if it can interact with other parts in complicated ways, carries a risk of the protocol breaking.

One of my fears with Ethereum protocol development is that we can be too eager to add new features to meet highly specific needs, even if those features bloat the protocol or add entire new types of interacting components or complicated cryptography as critical dependencies. This can be nice for short-term functionality gains, but it is highly destructive to preserving long-term self-sovereignty, and creating a hundred-year decentralized hyperstructure that transcends the rise and fall of empires and ideologies.

The core problem is that if protocol changes are judged from the perspective of "how big are they as changes to the existing protocol", then the desire to preserve backwards compatibility means that additions happen much more often than subtractions, and the protocol inevitably bloats over time. To counteract this, the Ethereum development process needs an explicit "simplification" / "garbage collection" function.

"Simplification" has three metrics:

* Minimizing total lines of code in the protocol. An ideal protocol fits onto a single page - or at least a few pages

* Avoiding unnecessary dependencies on fundamentally complex technical components. For example, a protocol whose security solely depends on hashes (even better: on exactly one hash function) is better than one that depends on hashes and lattices. Throwing in isogenies is worst of all, because (sorry to the truly brilliant hardworking nerds who figured that stuff out) nobody understands isogenies.

* Adding more _invariants_: core properties that the protocol can rely on. For example, EIP-6780 (selfdestruct removal) added the property that at most N storage slots can be changed per slot, significantly simplifying client development, and EIP-7825 (per-tx gas cap) added a maximum on the cost of processing one transaction, which greatly helps ZK-EVMs and parallel execution.
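The storage-slot invariant comes from the fact that, once SELFDESTRUCT can no longer wipe an account's entire storage in one opcode, every slot change has to be paid for individually, so gas alone caps how many slots can change. A back-of-the-envelope sketch, assuming a minimum of 5,000 gas per distinct slot changed (2,100 for the EIP-2929 cold access plus 2,900 for a warm SSTORE reset, as I understand the current rules), a 2^24 per-transaction cap for EIP-7825, and an illustrative 60M block gas limit. It ignores intrinsic transaction costs, so it is an upper bound.

```python
# Assumed minimum gas to change one previously untouched storage slot:
# 2,100 (EIP-2929 cold slot access) + 2,900 (warm SSTORE reset). An assumption, not a spec quote.
MIN_GAS_PER_SLOT_CHANGE = 2_100 + 2_900

# Assumed EIP-7825 per-transaction gas cap.
TX_GAS_CAP = 2**24

# Illustrative block gas limit, not a protocol constant.
BLOCK_GAS_LIMIT = 60_000_000


def max_slots_changed(gas: int, min_gas_per_slot: int = MIN_GAS_PER_SLOT_CHANGE) -> int:
    """Upper bound on distinct storage slots that `gas` can modify (ignores intrinsic costs)."""
    return gas // min_gas_per_slot


print("per block:", max_slots_changed(BLOCK_GAS_LIMIT))  # 12000
print("per tx:", max_slots_changed(TX_GAS_CAP))          # 3355
```

A client can size caches and write buffers against bounds like these, whereas an unbounded storage wipe offered no such guarantee.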

Garbage collection can be piecemeal, or it can be large-scale. The piecemeal approach takes existing features and streamlines them so that they are simpler and make more sense. One example is the gas cost reforms in Glamsterdam, which make many previously arbitrary gas costs depend instead on a small number of parameters that are clearly tied to resource consumption.

One large-scale garbage collection was replacing PoW with PoS. Another is likely to happen as part of Lean consensus, opening room to fix a large number of mistakes at the same time ( https://t.co/UnD191Yiza ).

Another approach is "Rosetta-style backwards compatibility", where features that are complex but little-used remain usable but are "demoted" from being part of the mandatory protocol and instead become smart contract code, so new client developers do not need to bother with them. Examples:

* After we upgrade to full native account abstraction, all old tx types can be retired, and EOAs can be converted into smart contract wallets whose code can process all of those transaction types

* We can replace existing precompiles (except those that are _really_ needed) with EVM or later RISC-V code

* We can eventually change the VM from EVM to RISC-V (or other simpler VM); EVM could be turned into a smart contract in the new VM.
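To illustrate the "demotion" idea, here is a hypothetical sketch in which the mandatory protocol only knows how to hand a transaction to an account's code, while knowledge of old transaction formats lives inside that code. All names (`Tx`, `legacy_eoa_code`, `core_protocol_execute`) are invented for illustration; type numbers 0 and 2 correspond to legacy and EIP-1559 transactions, but the handling itself is a placeholder.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Tx:
    tx_type: int
    payload: dict


# Hypothetical smart-contract wallet code for a converted EOA. Legacy formats are
# handled here, so new client developers do not need to implement them.
def legacy_eoa_code(tx: Tx) -> str:
    handlers: dict[int, Callable[[dict], str]] = {
        0: lambda p: f"legacy transfer of {p['value']} wei to {p['to']}",
        2: lambda p: f"EIP-1559 transfer of {p['value']} wei to {p['to']}",
    }
    handler = handlers.get(tx.tx_type)
    if handler is None:
        raise ValueError(f"unsupported transaction type {tx.tx_type}")
    return handler(tx.payload)


# The mandatory protocol: one generic path that delegates to whatever code the account has.
def core_protocol_execute(account_code: Callable[[Tx], str], tx: Tx) -> str:
    return account_code(tx)


print(core_protocol_execute(legacy_eoa_code, Tx(0, {"value": 5, "to": "0xabc"})))
```

The design choice being illustrated is that old features keep working for the accounts that use them, but the cost of supporting them moves out of every client and into code that runs like any other contract.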

Finally, we want to move away from client developers feeling the need to handle all older versions of the Ethereum protocol. That can be left to older client versions running in docker containers.

In the long term, I hope that the rate of change to Ethereum can be slower. For various reasons, I think that ultimately this _must_ happen. These first fifteen years should in part be viewed as an adolescence stage where we explored a lot of ideas and learned what works, what is useful and what is not. We should strive to keep the parts that turned out not to be useful from becoming a permanent drag on the Ethereum protocol.

Basically, we want to improve Ethereum in a way that looks like this:

2026 is the year that we take back lost ground in terms of self-sovereignty and trustlessness.

Some of what this practically means:

Full nodes: thanks to ZK-EVM and BAL, it will once again become easier to locally run a node and verify the Ethereum chain on your own computer.

Helios: actually verify the data you're receiving from RPCs instead of blindly trusting it.
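The core of what a light client like Helios does is check RPC responses against a commitment it already trusts. A simplified sketch, assuming a binary SHA-256 Merkle tree rather than Ethereum's actual Merkle-Patricia trie with Keccak, and assuming the trusted root is obtained separately (Helios derives it by following consensus; that part is not modeled here).

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def build_levels(leaves: list[bytes]) -> list[list[bytes]]:
    """All levels of a binary Merkle tree, leaves first (leaf count must be a power of two)."""
    level = [h(leaf) for leaf in leaves]
    levels = [level]
    while len(level) > 1:
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        levels.append(level)
    return levels


def make_proof(levels: list[list[bytes]], index: int) -> list[bytes]:
    """What an honest RPC would send: the sibling hash at each level."""
    proof = []
    for level in levels[:-1]:
        proof.append(level[index ^ 1])
        index //= 2
    return proof


def verify(trusted_root: bytes, leaf: bytes, index: int, proof: list[bytes]) -> bool:
    """Client side: recompute the root from the claimed leaf and compare."""
    node = h(leaf)
    for sibling in proof:
        node = h(node + sibling) if index % 2 == 0 else h(sibling + node)
        index //= 2
    return node == trusted_root


leaves = [b"alice:10", b"bob:20", b"carol:30", b"dave:40"]
levels = build_levels(leaves)
root = levels[-1][0]  # assumed to come from a trusted source, not from the RPC
proof = make_proof(levels, 1)
print(verify(root, b"bob:20", 1, proof))    # True
print(verify(root, b"bob:9999", 1, proof))  # False: a lying RPC is caught
```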

ORAM, PIR: ask for data from RPCs without revealing which data you're asking, so you can access dapps without your access patterns being sold off to dozens of third parties all around the world.
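PIR can sound like magic, so here is the classic two-server XOR scheme as a minimal sketch. It is a textbook construction chosen for illustration, not a description of any specific Ethereum RPC proposal, and it assumes two servers holding identical copies of the data that do not collude. Each server sees only a uniformly random set of indices, yet XORing the two answers yields exactly the requested record.

```python
import secrets
from functools import reduce


def xor_bytes(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def server_answer(db: list[bytes], query: set[int]) -> bytes:
    """Run by each server: XOR together the records whose indices appear in the query."""
    zero = bytes(len(db[0]))
    return reduce(xor_bytes, (db[i] for i in query), zero)


def make_queries(n: int, want: int) -> tuple[set[int], set[int]]:
    """Server 1 gets a uniformly random subset; server 2 gets it with `want` toggled.

    Each set on its own is uniformly random, so neither server learns `want`.
    """
    s1 = {i for i in range(n) if secrets.randbits(1)}
    s2 = s1 ^ {want}
    return s1, s2


def private_fetch(db: list[bytes], want: int) -> bytes:
    s1, s2 = make_queries(len(db), want)
    a1 = server_answer(db, s1)  # in reality, computed by server 1
    a2 = server_answer(db, s2)  # in reality, computed by server 2 on its own copy
    # Every record except `want` appears in both sets or in neither, so it cancels out.
    return xor_bytes(a1, a2)


records = [b"bal:10", b"bal:20", b"bal:30", b"bal:40"]  # equal-length records
print(private_fetch(records, 2))  # b'bal:30'
```

Practical schemes differ a lot (single-server PIR, preprocessing, ORAM on the server side), but the same principle holds: the server does work over many records so the query reveals nothing about which one you wanted.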

Social recovery wallets and timelocks: wallets that don't make you lose all your money if you misplace your seedphrase, or if an online or offline attacker extracts your seedphrase, and *also* don't make all your money backdoored by Google.
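A hypothetical sketch of guardian-based recovery with a timelock: a threshold of guardians can rotate the signing key, but only after a delay during which the current owner can cancel. The class, the method names and the cancel-by-owner rule are illustrative assumptions, not the design of any particular wallet.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class RecoverableWallet:
    owner: str
    guardians: set[str]
    threshold: int
    delay: int  # timelock, in seconds, between reaching the threshold and finalizing
    pending_owner: Optional[str] = None
    approvals: set[str] = field(default_factory=set)
    ready_at: Optional[int] = None

    def approve_recovery(self, guardian: str, new_owner: str, now: int) -> None:
        if guardian not in self.guardians:
            raise PermissionError("not a guardian")
        if new_owner != self.pending_owner:
            # A proposal for a different key restarts the process.
            self.pending_owner, self.approvals, self.ready_at = new_owner, set(), None
        self.approvals.add(guardian)
        if len(self.approvals) >= self.threshold and self.ready_at is None:
            self.ready_at = now + self.delay

    def cancel_recovery(self, caller: str) -> None:
        # The current owner can veto during the timelock window, e.g. if guardians collude.
        if caller != self.owner:
            raise PermissionError("only the owner can cancel")
        self.pending_owner, self.approvals, self.ready_at = None, set(), None

    def finalize(self, now: int) -> bool:
        if self.ready_at is None or now < self.ready_at:
            return False
        self.owner = self.pending_owner
        self.pending_owner, self.approvals, self.ready_at = None, set(), None
        return True
```

For example, with three guardians, a threshold of 2 and a 7-day delay, two guardian approvals let you finalize a new key a week later, and the old key can cancel at any point before that.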

Privacy UX: make private payments from your wallet, with the same user experience as making public payments.

Privacy censorship resistance: private payments with the ERC-4337 mempool, and soon native AA + FOCIL, without relying on the public broadcaster ecosystem.

Application UIs: use more dapps from an onchain UI with IPFS, without relying on trusted servers that would lock you out of practical recovery of your assets if they went offline, and would give you a hijacked UI that steals your funds if they got hacked for even a millisecond.
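The safety property of an IPFS-hosted UI comes from content addressing: the client fetches bytes by hash and can check that what it got matches what was committed to. A simplified sketch, assuming a plain hex SHA-256 digest as the address (real IPFS CIDs add multihash/multibase encoding and chunk files into a DAG) and assuming the expected address is read from an onchain record such as an ENS contenthash; that lookup is not modeled.

```python
import hashlib


def content_address(bundle: bytes) -> str:
    """Simplified content address: hex SHA-256 of the raw bytes."""
    return hashlib.sha256(bundle).hexdigest()


def load_ui(fetched: bytes, expected_address: str) -> bytes:
    """Refuse to run a UI whose bytes do not match the committed address.

    `expected_address` stands in for a value read from an onchain record (hypothetical here).
    """
    if content_address(fetched) != expected_address:
        raise ValueError("UI bundle does not match its committed content address")
    return fetched


published = b"<html>dapp UI</html>"
committed = content_address(published)  # what the developers would register onchain
load_ui(published, committed)  # accepted
try:
    load_ui(published + b"<script>steal()</script>", committed)
except ValueError as err:
    print("rejected:", err)
```

A hacked gateway can still refuse to serve the UI, but it cannot swap in a modified one without the check failing.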

In many of these areas, over the last ten years we have seen serious backsliding in Ethereum. Nodes went from easy to run to hard to run. Dapps went from static pages to complicated behemoths that leak all your data to a dozen servers. Wallets went from routing everything through the RPC, which could be any node of your choice including on your own computer, to leaking your data to a dozen servers of their choice. Block building became more centralized, putting Ethereum transaction inclusion guarantees under the whims of a very small number of builders.

In 2026, no longer. Every compromise of values that Ethereum has made up to this point - every moment where you might have been thinking, is it really worth diluting ourselves so much in the name of mainstream adoption - we are making that compromise no longer.

It will be a long road. We will not get everything we want in the next Kohaku release, or the next hard fork, or the hard fork after that. But it will make Ethereum into an ecosystem that deserves not only its current place in the universe, but a much greater one.

In the world computer, there is no centralized overlord.

There is no single point of failure.

There is only love.

Milady.

As an advisor to the @ethereumfndn

To our community in Iran, our eyes and thoughts are with you and the entire Iranian population. We see you bravely fighting tyranny and being brutally silenced, all your rights scrapped, and communications cut

Many of you travelled from Iran to

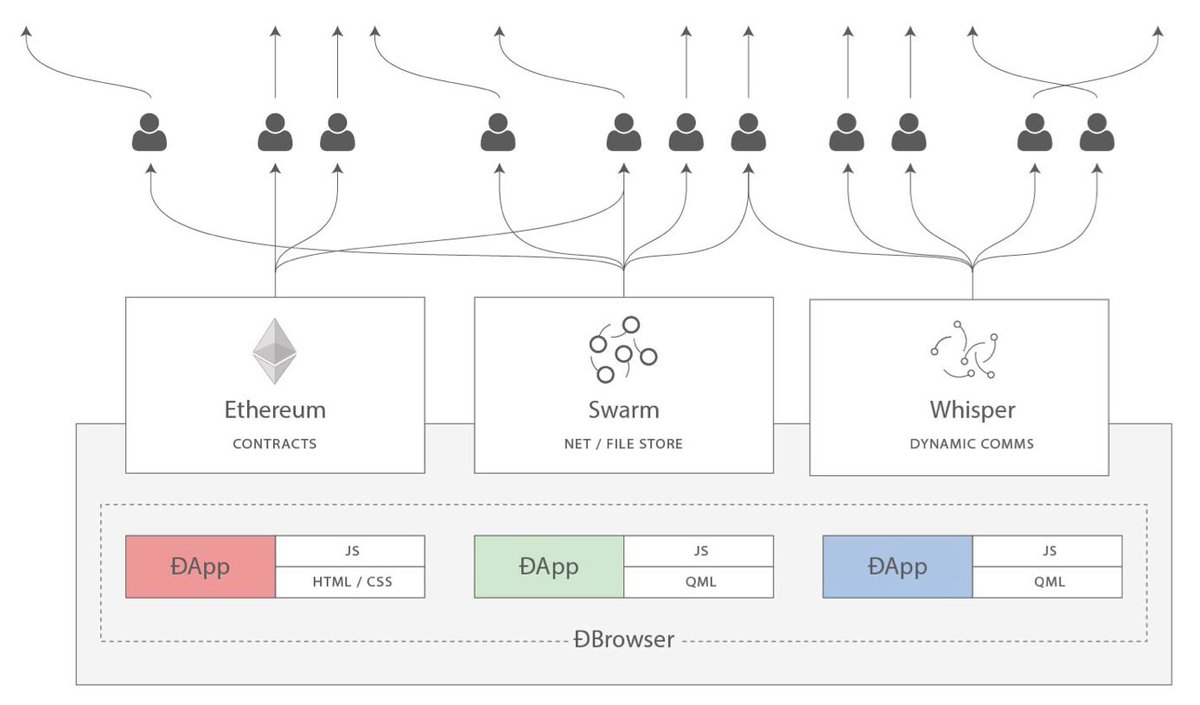

In 2014, there was a vision: you can have permissionless, decentralized applications that could support finance, social media, ride sharing, governing organizations, crowdfunding, potentially create an entire alternative web, all on the backs of a suite of technologies.

Ethereum: the blockchain. The world computer that could give any application its shared memory.

Whisper: the data layer. Messages that are too expensive for a blockchain and do not need consensus.

Swarm: the storage layer. Store files for long-term access.

Over the last five years, this core vision has at times become obscured, with various "metas" and "narratives" at various times taking center stage. But the core vision has never died. And in fact, the core technologies behind it are only growing stronger.

Ethereum is now proof of stake. Ethereum is now scaling, it is now cheap, and it is on track to get more scalable and cheaper thanks to the power of ZK-EVMs. Thanks to ZK-EVM + PeerDAS, the "sharding" vision is effectively being realized. And L2s can give additional and different kinds of gains in speed on top.

Whisper is now Waku ( https://t.co/uj5h9iSpIL ), and already powers many applications (eg. https://t.co/owlo5yoS68, https://t.co/hDizYCFjuq just to name two I use). Even outside of Waku, the quality of decentralized messaging has increased. Fileverse (decentralized Google Docs and Sheets alternative: https://t.co/ZIKj4U5pQe ) has seen massive gains in usability over the past year.

IPFS is now highly performant and robust as a decentralized way of retrieving files, though IPFS alone does not solve the storage problem. Hence, there is still room to improve there.

All of the prerequisites for the original web3 vision are here, in full force, and are continuing to get stronger over the next few years. Hence, it's time to buidl, and buidl decentralized.

Fileverse is an excellent example of the right way to do things:

* It uses Ethereum and Gnosis Chain for what they are good for: names, accounts and permissioning, document registration

* It uses decentralized messaging and file storage to store documents and propagate changes to documents

* The application passes the walkaway test: https://t.co/xO1dNLlnhf (even if Fileverse disappears, you can still retrieve your documents and even keep editing them with the open source UI)

This is what we mean by "build a hammer that is a tool you buy once and it's yours, not a corposlop AI dishwasher that requires you to register for a google account and charges a subscription fee per month for extra washing modes, and probably spies on you and stops working if you get politically disfavored by a foreign country".

If you think this criticism of corposlop is hyperbolic, well turns out, it's literally a concatenation of these three:

* https://t.co/GBNaXOa454

* https://t.co/saD3cNp4Ae

* https://t.co/skoJ58vbqz

In 2014, decentralized applications were toys, hundreds of times more difficult to use than web2. In 2026, Fileverse is usable enough that I regularly write documents in it and send them to other people to collaborate. The decentralized renaissance is coming, and you can be part of making it happen.

Ethereum itself must pass the walkaway test.

Ethereum is meant to be a home for trustless and trust-minimized applications, whether in finance, governance or elsewhere. It must support applications that are more like tools - the hammer that once you buy it's yours - than like services that lose all functionality once the vendor loses interest in maintaining them (or worse, gets hacked or becomes value-extractive). Even when applications do have functionality that depends on a vendor, Ethereum can help reduce those dependencies as much as possible, and protect the user as much as possible in those cases where the dependencies fail.

But building such applications is not possible on a base layer which itself depends on ongoing updates from a vendor in order to continue being usable - even if that "vendor" is the all core devs process. Ethereum the blockchain must have the traits that we strive for in Ethereum's applications. Hence, Ethereum itself must pass the walkaway test.

This means that Ethereum must get to a place where we _can ossify if we want to_. We do not have to stop making changes to the protocol, but we must get to a place where Ethereum's value proposition does not strictly depend on any features that are not in the protocol already.

This includes the following:

* Full quantum-resistance. We should resist the trap of saying "let's delay quantum-resistance until the last possible moment in the name of eking out more efficiencies for a while longer". Individual users have that right, but the protocol should not. Being able to say "Ethereum's protocol, as it stands today, is cryptographically safe for a hundred years" is something we should strive to get to as soon as possible, and insist on as a point of pride.

* An architecture that can expand to sufficient scalability. The protocol needs to have the properties that allow it to expand to many thousands of TPS over time, most notably ZK-EVM validation and data sampling through PeerDAS. Ideally, we get to a point where further scaling is done through "parameter only" changes - and ideally _those_ changes are not BPO-style forks, but rather are made with the same validator voting mechanism we use for the gas limit.

* A state architecture that can last decades. This means deciding, and implementing, whatever form of partial statelessness and state expiry will let us feel comfortable letting Ethereum run with thousands of TPS for decades, without breaking sync or hard disk or I/O requirements. It also means future-proofing the tree and storage types to work well with this long-term environment.

* An account model that is general-purpose (this is "full account abstraction": move away from enshrined ECDSA for signature validation)

* A gas schedule that we are confident is free of DoS vulnerabilities, both for execution and for ZK-proving

* A PoS economic model that, with all we have learned over the past half decade of proof of stake in Ethereum and full decade beyond, we are confident can last and remain decentralized for decades, and supports the usefulness of ETH as trustless collateral (eg. in governance-minimized ETH-backed stablecoins)

* A block building model that we are confident will resist centralization pressure and guarantee censorship resistance even in unknown future environments
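For reference, the gas limit mechanism mentioned above works by letting each block proposer nudge the limit toward their own target, with the change per block bounded by a fraction (1/1024) of the parent block's limit. A minimal sketch of that rule, as it might look if reused for some other scaling parameter; the function names are illustrative, and details such as the protocol's minimum gas limit are omitted.

```python
def next_limit(parent: int, target: int) -> int:
    """Move toward the proposer's target, as far as the per-block bound allows.

    Validity rule modeled here: |new - parent| < parent // 1024.
    """
    max_step = parent // 1024 - 1
    if target >= parent:
        return min(target, parent + max_step)
    return max(target, parent - max_step)


def blocks_to_reach(start: int, target: int) -> int:
    """Blocks needed if every proposer votes for the same target."""
    limit, blocks = start, 0
    while limit != target:
        limit = next_limit(limit, target)
        blocks += 1
    return blocks


print(next_limit(60_000_000, 100_000_000))  # 60058592
print(blocks_to_reach(60_000_000, 100_000_000))
```

Going from 60M to 100M takes a few hundred blocks, which is under two hours at 12-second slots: fast enough for gradual scaling, but no single proposer can force an abrupt change.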

Ideally, we do the hard work over the next few years to get to a point where almost all future innovation can happen through client optimization, and get reflected in the protocol through parameter changes. Every year, we should tick off at least one of these boxes, and ideally multiple. Do the right thing once, based on knowledge of what is truly the right thing (not compromise halfway fixes), and maximize Ethereum's technological and social robustness for the long term.

Ethereum goes hard.

This is the gwei.

If done properly, this is a very good move.

I hope it can be verifiable and replicable (this probably means publishing all tweets and anonymized likes with a 4-week delay).

This would not solve all problems, but it would very effectively address concerns about algorithmic transparency that I and many other members of the public have been raising.

I actually think 4 weeks may be over-ambitious, because it means the algo would need to change that often to stay ahead of people gaming it; hence why I proposed 1 year in my recent post advocating algorithmic transparency. But this really does need to be verifiable and replicable, so that someone who thinks they've been shadowbanned, deboosted, etc. can walk through the code executing the algorithm and see why their posts are not being seen.

https://t.co/Hlzhn78Bot

I agree with maybe 60% of this, but one bit that is particularly important to highlight is the explicit separation between what the poster calls "the open web" (really, the corposlop web), and "the sovereign web".

https://t.co/w7Vtbn7uFt

This is a distinction I did not realize until recently, and I must admit the bitcoin maximalists were far ahead: a big part of their resistance to ICOs, tokens other than bitcoin, arbitrary financial applications, etc was precisely about keeping bitcoin "sovereign" and not "corposlop". The big error that many of them made was trying to achieve this goal with either government crackdowns or user disempowerment (keeping bitcoin script limited, and rejecting many categories of applications entirely), but their fear was real.

So what is corposlop? In essence, it is the combination of three things:

* Corporate optimization power

* An aura of respectableness of being a company with sleek polished branding

* Behavior that is the exact opposite of respectable, because that's what's needed to maximize profit

Corposlop includes things like:

* Social media that maximizes dopamine, outrage and other forms of short-term engagement, at the expense of long-term value and fulfillment

* Needless mass data collection from users, often followed by managing it carelessly or even casually selling it to third parties

* Walled gardens charging monopolistic high fees and actively preventing people from even linking to other platforms

* Hollywood releasing the 7th sequel to some tired franchise, because that's the most risk-averse thing to do

* Every corporation that rallied around slogans of diversity and equity and the need to overturn society to fight racism in 2020, and then publicly mocked those causes for engagement in 2025

This is all digital corposlop; there are big and important analogues to this in the physical world too.

Corposlop is soulless: trend-following homogeneity that is both evil and lame https://t.co/FZwqwujAGb

These are things that appear to serve the user, but actually disempower the user.

I have many qualms with Apple, but aside from their monopolistic practices, they actually have many non-corposlop traits. They serve users not by constantly asking "what do users want this quarter", but by having an opinionated long-term vision. They have a strong emphasis on privacy. They resist and create trends rather than following them. I just wish they could take the brave step of ending their monopolistic practices and switch to an open source first strategy. It may damage their market cap, but man must live for something higher than market caps.

Zac from Aztec was also early to recognize the importance of this, with a post that is on the whole very pro-freedom, but at the same time does not shrink back from labeling what is essentially corposlop a primary enemy, even when it does not violate the libertarian non-aggression principle.

https://t.co/PsbySLhLWU

In 2000, the understanding of "sovereignty" largely focused on avoiding the iron fist of government. Today, "sovereignty" also means securing your digital privacy through cryptography, and securing your own mind from corporate mind warfare trying to extract your attention and your dollars. It also means doing things because you believe in them, and declaring independence from the homogenizing and soul-sucking concept of "the meta".

These are the kinds of tools that we should build more of. Build tools like:

* Privacy-preserving local-first applications that minimize dependence on and data leaks to third parties

* Social media platforms and tools that let the user take control of what content they see. Appeal to people's long-term goals, not short-term impulses

* Financial tools that help users grow their wealth, and do not encourage 50x leverage or sports betting or taking out a loan to pay for a burrito

* AI tools that are maximally open, privacy-friendly and local-friendly, and that maximize productivity by combining the power of human and bot, rather than encouraging the user to sit back and let the bot do all the work, so they learn nothing

* Applications, companies, and physical environments that take an opinionated view on the kind of world they want to see, and have an opinionated culture

* DAOs that can support organizations and communities that steadfastly pursue a unique objective, and do not all get captured by the same groups. Privacy-preserving and non-tokenholder-driven voting can help here

Be sovereign. Reject corposlop. Believe in somETHing.

Increasing bandwidth is safer than reducing latency

With PeerDAS and ZKPs, we know how to scale, and potentially we can scale thousands of times compared to the status quo. The numbers become far more favorable than before (eg. see analysis here, pre and post-sharding https://t.co/2gR8V6hxJe ). There is no law of physics that prevents combining extreme scale with decentralization.
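Part of why the numbers are so favorable is that the security of data availability sampling comes from probability, not from downloading everything. A simplified one-dimensional model (not PeerDAS's actual parameters): with data erasure-coded at rate 1/2, any half of the chunks suffices to reconstruct, so if the data is unrecoverable, fewer than half the chunks are available and each uniformly random sample succeeds with probability below 1/2. Assuming independent samples, a node is fooled with probability at most (1/2)^k after k samples.

```python
import math


def max_fooling_probability(samples: int, available_fraction: float = 0.5) -> float:
    """Chance that every independent uniform sample hits an available chunk.

    If rate-1/2 erasure-coded data is NOT recoverable, fewer than half of the
    extended chunks are available, so 0.5 per sample is an upper bound.
    """
    return available_fraction ** samples


def samples_needed(max_probability: float, available_fraction: float = 0.5) -> int:
    """Smallest sample count that pushes the fooling probability to max_probability or below."""
    return math.ceil(math.log(max_probability) / math.log(available_fraction))


k = samples_needed(1e-9)
print(k, max_fooling_probability(k))  # 30 samples, roughly 9.3e-10
```

Note that the sample count does not depend on how much data there is, which is what lets total data grow while each node's work stays small.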

Reducing latency is not like this. We are fundamentally constrained by speed of light, and on top of that we are also constrained by:

* Need to support nodes (especially attesters) in rural environments, worldwide, and in home or commercial environments outside of data centers.

* Need to support censorship-resistance and anonymity for nodes (especially proposers and attesters).

* The fact that running a node in a non-super-concentrated location must be not only possible, but also economically viable. If staking outside NYC drops your revenues by 10%, over time more and more people will stake in NYC.
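To put the speed-of-light constraint in numbers: a message between nodes on opposite sides of the planet cannot arrive faster than physics allows, and every round of messaging within a slot pays that cost again. A sketch, assuming light in optical fiber travels at roughly two thirds of its vacuum speed and ignoring routing detours, queuing and processing time, all of which make real latencies higher.

```python
C_VACUUM_KM_S = 299_792.458
FIBER_SPEED_FRACTION = 2 / 3  # rough assumption for light in optical fiber
EARTH_CIRCUMFERENCE_KM = 40_075  # equatorial


def min_one_way_ms(distance_km: float, speed_fraction: float = FIBER_SPEED_FRACTION) -> float:
    """Lower bound on one-way propagation delay, in milliseconds."""
    return distance_km / (C_VACUUM_KM_S * speed_fraction) * 1000


antipodal_km = EARTH_CIRCUMFERENCE_KM / 2
print(f"vacuum: {min_one_way_ms(antipodal_km, 1.0):.0f} ms")  # ~67 ms
print(f"fiber:  {min_one_way_ms(antipodal_km):.0f} ms")       # ~100 ms
```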

Ethereum itself must pass the walkaway test, and so we cannot build a blockchain that depends on constant social re-juggling to ensure decentralization. Economics cannot handle the entire load, but it must handle most.

Now, we can decrease latency quite a bit from the present-day situation without making tradeoffs. In particular:

* P2P improvements (esp erasure coding) can decrease message propagation times without requiring individual nodes to have higher bandwidth

* An available chain with a smaller node count per slot (eg. 512 instead of 30,000) can remove the need for an aggregation step, allowing the entire hot path to happen in one subnet

This plausibly buys us 3-6x. Hence, I think moderate latency decreases, to a 2-4s level, are very much in the realm of possibility.

But Ethereum is NOT the world video game server, it is the world heartbeat.

If you need to build applications that are faster than the heartbeat, they will need to have offchain components. This is a big part of why L2s will continue to have a role even in a greatly scaled Ethereum (there are other reasons too, around VM customization, and around applications that need _even more scale_).

Ultimately, AI will necessitate applications that go faster than the heartbeat no matter what we do. If an AI can think 1000x faster than humans, then to the AI, the "subjective speed of light" is only 300 km/s. Hence, it can talk near-instantly within the scope of a city, but not further. As a result, there will inevitably be AI-focused applications that will need "city chains", potentially even chains localized to a single building. These will have to be L2s.
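The 300 km/s figure is just the speed of light divided by the assumed 1000x speedup. A small sketch that carries the arithmetic one step further, to a round trip across a city versus halfway around the world; the 30 km and 20,000 km distances are illustrative, and it uses vacuum light speed, so real networks would be slower still.

```python
C_KM_S = 300_000  # speed of light, rounded as in the text


def subjective_speed_km_s(speedup: float) -> float:
    """Speed of light as experienced by a mind thinking `speedup` times faster than a human."""
    return C_KM_S / speedup


def subjective_round_trip_s(distance_km: float, speedup: float) -> float:
    """Round-trip light delay over `distance_km`, in the fast mind's subjective seconds."""
    return 2 * distance_km / subjective_speed_km_s(speedup)


print(subjective_speed_km_s(1000))            # 300.0
print(subjective_round_trip_s(30, 1000))      # across a city: about 0.2 s
print(subjective_round_trip_s(20_000, 1000))  # halfway around the world: about 133 s
```

A fifth of a subjective second feels near-instant; over two subjective minutes does not, which is the gap "city chains" would fill.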

And on the flipside, it would be too much of a cost to make it viable to run a staking node on Mars. Even Bitcoin does not strive for this. Ultimately, Ethereum belongs to Terra, and its L2s will serve both hyper-localized needs in its cities, and hyper-scaled needs planet-wide, and users on other worlds.

Milady.

One metaphor for Ethereum is BitTorrent, and how that p2p network combines decentralization and mass scale. Ethereum's goal is to do the same thing but with consensus.

Another metaphor for Ethereum is Linux.

* Linux is free and open source software, and does not compromise on this

* Linux is quietly depended on by billions of people and enterprises worldwide. Governments regularly use it.

* There are many operating systems based on Linux that pursue mass adoption

* There are Linux distributions (eg. Arch) that are highly purist, minimalistic and technologically beautiful, and focus on making the user feel powerful, not comfortable

(Actually, BitTorrent is depended on by enterprises too: many businesses and even governments (!!) use it to distribute large files to their users https://t.co/DS3K6aF1KN )

We must make sure that Ethereum L1 works as the financial (and ultimately identity, social, governance...) home for individuals and organizations who want the higher level of autonomy, and give them access to the full power of the network without dependence on intermediaries. At the same time, what Linux shows is that this is fully compatible with providing value to very large numbers of people, and even being loved and trusted by enterprises worldwide. Many enterprises in fact desperately want to build on an open and resilient ecosystem - what we call trustlessness, they call prudent counterparty risk minimization.

This is the gwei.

“Ethereum was not created to make finance efficient or apps convenient. It was created to set people free”

This was an important - and controversial - line from the Trustless Manifesto ( https://t.co/QAvZfiNxpe ), and it is worth revisiting it and better understanding what it means.

“Efficient” and “convenient” have the connotation of improving the average case, in situations where it’s already pretty good. Efficiency is about telling the world's best engineers to put their souls into reducing latency from 473 ms to 368 ms, or increasing yields from 4.5% APY to 5.3% APY. Convenience is about people making one click instead of three, and reducing signup times from 1 min to 20 sec.

These things can be good to do. But we must do them under the understanding that we will never be as good at this game as the Silicon Valley corporate players. And so the primary underlying game that Ethereum plays must be a different game. What is the game? Resilience.

Resilience is the game where it’s not about 4.5% APY vs 5.3% APY - rather, it’s about minimizing the chance that you get -100% APY.

Resilience is the game where if you become politically unpopular and get deplatformed, or if the developers of your application go bankrupt or disappear, or if Cloudflare goes down, or if an internet cyberwar breaks out, your 2000ms latency continues to be 2000ms.

Resilience is the game where anyone, anywhere in the world will be able to access the network and be a first-class participant.

Resilience is sovereignty. Not sovereignty in the sense of lobbying to become a UN member state and shaking hands at Davos in two weeks, but sovereignty in the sense that people talk about "digital sovereignty" or "food sovereignty" - aggressively reducing your vulnerabilities to external dependencies that can be taken away from you on a whim. This is the sense in which the world computer can be sovereign, and in doing so make its users also sovereign.

This baseline is what enables interdependence as equals, and not as vassals of corporate overlords thousands of kilometers away.

This is the game that Ethereum is suited to win, and it delivers a type of value that, in our increasingly unstable world, a lot of people are going to need.

The fundamental DNA of web2 consumer tech is not suited to resilience. The fundamental DNA of _finance_ often spends considerable effort on resilience, but it is a very partial form of resilience, good at solving for some types of risks but not others.

Blockspace is abundant. Decentralized, permissionless and resilient blockspace is not. Ethereum must first and foremost be decentralized, permissionless and resilient blockspace - and then make that abundant.

Now that ZKEVMs are at alpha stage (production-quality performance, remaining work is safety) and PeerDAS is live on mainnet, it's time to talk more about what this combination means for Ethereum.

These are not minor improvements; they are shifting Ethereum into being a fundamentally new and more powerful kind of decentralized network.

To see why, let's look at the two major types of p2p network so far:

BitTorrent (2000): huge total bandwidth, highly decentralized, no consensus

Bitcoin (2009): highly decentralized, consensus, but low bandwidth - because it’s not “distributed” in the sense of work being split up, it’s *replicated*

Now, with Ethereum adding PeerDAS (2025) and ZK-EVMs (expect small portions of the network to use them in 2026), we get: decentralized, consensus and high bandwidth

The trilemma has been solved - not on paper, but with live running code, of which one half (data availability sampling) is *on mainnet today*, and the other half (ZK-EVMs) is *production-quality on performance today* - safety is what remains.
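To see why sampling gives strong guarantees without any node downloading everything, consider a simplified model. Its parameters are assumptions for illustration, not a restatement of the PeerDAS spec. Data is erasure-coded into 128 columns, and any 64 of them are enough to reconstruct it. An attacker who wants to make the data unrecoverable must therefore withhold at least 65 columns. A node that checks `s` distinct random columns is fooled only if every one of them falls among the at most 63 columns that are still available.

```python
from math import comb

TOTAL_COLUMNS = 128      # assumed size of the erasure-coded extension
NEEDED_TO_RECOVER = 64   # assumed: any half of the columns reconstructs the data


def fooled_probability(samples: int) -> float:
    """Chance that `samples` distinct, uniformly random column queries all
    succeed even though the data is unrecoverable.

    Unrecoverable means at most NEEDED_TO_RECOVER - 1 columns are available.
    The node is fooled only if every sampled column lies in that available
    set (sampling without replacement, so this is hypergeometric)."""
    available = NEEDED_TO_RECOVER - 1
    return comb(available, samples) / comb(TOTAL_COLUMNS, samples)


for s in (1, 8, 16, 32):
    print(s, fooled_probability(s))
```

In this model, each extra sample more than halves the failure probability. A few dozen samples therefore push it to negligible levels, even though each node downloads only a small fraction of the data. This is the sense in which the work is split up rather than replicated.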

This was a 10-year journey (see the first commit of my original post on DAS here: https://t.co/Fa0jKFgObW , and ZK-EVM attempts started in ~2020), but it's finally here.

Over the next ~4 years, expect to see the full extent of this vision roll out:

* In 2026, large non-ZKEVM-dependent gas limit increases due to BALs and ePBS, and we'll see the first opportunities to run a ZKEVM node

* In 2026-28, gas repricings, changes to state structure, exec payload going into blobs, and other adjustments to make higher gas limits safe

* In 2027-30, large further gas limit increases, as ZKEVM becomes the primary way to validate blocks on the network

A third piece of this is distributed block building.

A long-term holy grail is to get to a future where the full block is *never* constituted in one single place. This will not be necessary for a long time, but IMO it is worth striving to at least have the capability to do that.

Even before that point, we want the meaningful authority in block building to be as distributed as possible. This can be done either in-protocol (eg. maybe we figure out how to expand FOCIL to make it a primary channel for txs), or out-of-protocol with distributed builder marketplaces. This reduces risk of centralized interference with real-time transaction inclusion, AND it creates a better environment for geographical fairness.
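As a rough illustration of the in-protocol direction, here is a heavily simplified sketch of the kind of rule an inclusion-list mechanism like FOCIL imposes. Several independent parties each publish a list of transactions. A block is acceptable only if it includes every listed transaction, unless it truly has no room left for it. The `Tx` type and the function name are hypothetical. The real FOCIL design also has conditions this sketch ignores, such as exempting transactions that have become invalid.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tx:
    tx_hash: str  # hypothetical transaction identifier
    gas: int      # gas the transaction would consume


def satisfies_inclusion_lists(block_txs: list[Tx], gas_limit: int,
                              inclusion_lists: list[list[Tx]]) -> bool:
    """Return True if every transaction named by any inclusion list is in the
    block, or could not have fit in the block's leftover gas."""
    included = {tx.tx_hash for tx in block_txs}
    remaining_gas = gas_limit - sum(tx.gas for tx in block_txs)
    for inclusion_list in inclusion_lists:
        for tx in inclusion_list:
            if tx.tx_hash not in included and tx.gas <= remaining_gas:
                return False  # a listed tx was left out despite room for it
    return True
```

The lists come from multiple parties, so a single builder that censors a listed transaction produces a block that fails this check. That is how authority over inclusion can be distributed even when block construction itself is not.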

Onward.

Welcome to 2026! Milady is back.

Ethereum did a lot in 2025: gas limits increased, blob count increased, node software quality improved, zkEVMs blasted through their performance milestones, and with zkEVMs and PeerDAS, Ethereum made its largest step toward being a fundamentally new and more powerful kind of blockchain (more on this later).

But we have a challenge: Ethereum needs to do more to meet its own stated goals. Not the quest of "winning the next meta" regardless of whether it's tokenized dollars or political memecoins, not arbitrarily convincing people to help us fill up blockspace to make ETH ultrasound again, but the mission:

To build the world computer that serves as a central infrastructure piece of a more free and open internet.

We're building decentralized applications. Applications that run without fraud, censorship or third-party interference. Applications that pass the walkaway test: they keep running even if the original developers disappear. Applications where if you're a user, you don't even notice if Cloudflare goes down - or even if all of Cloudflare gets hacked by North Korea. Applications whose stability transcends the rise and fall of companies, ideologies and political parties. And applications that protect your privacy. All this - for finance, and also for identity, governance and whatever other civilizational infrastructure people want to build.

These properties sound radical, but we must remember that a generation ago any wallet, kitchen appliance, book or car would fulfill every single one of them. Today, all of the above are by default becoming subscription services, consigning you to permanent dependence on some centralized overlord.

Ethereum is the rebellion against this.

To achieve this, it needs to be (i) usable, and usable at scale, and (ii) actually decentralized. This needs to happen at both (a) the blockchain layer, including the software we use to run and talk to the blockchain, and (b) the application layer. All of these pieces must be improved - they are already being improved, but they must be improved more.

Fortunately, we have powerful tools on our side - but we need to apply them, and we will.

Wishing everyone an exciting 2026.

Milady.

One of the reasons why I'm enthusiastic about @devanshmehta's deep funding work is that I think it's a big tragedy that we've pushed so much into cloud-based software and not done enough local-first, including in gaming, and it's clear that "it's easy to pirate local-first but you can't pirate the cloud" is a major reason why.

If back in 2000 we had figured out some regime where sales tax on electronic devices gets routed into automated funding for open source software in proportion to how much people use and benefit from it, I wonder if that whole tragedy could have been avoided.
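The core of such a regime is a rule for splitting a pool of money across projects in proportion to measured benefit. Here is a minimal sketch. It assumes usage has already been reduced to a single non-negative weight per project; measuring that fairly is the hard part, and this sketch does not address it. It uses the largest-remainder method so that whole cents add up exactly to the pool. The project names are hypothetical.

```python
def allocate(pool_cents: int, usage: dict[str, int]) -> dict[str, int]:
    """Split `pool_cents` across projects in proportion to `usage` weights,
    using the largest-remainder method so the shares sum exactly to the pool."""
    total = sum(usage.values())
    if total <= 0:
        raise ValueError("need at least one positive usage weight")
    # Integer floor of each project's exact proportional share.
    shares = {name: pool_cents * w // total for name, w in usage.items()}
    leftover = pool_cents - sum(shares.values())
    # Hand the leftover cents to the projects with the largest fractional parts.
    by_remainder = sorted(usage, key=lambda n: (pool_cents * usage[n]) % total,
                          reverse=True)
    for name in by_remainder[:leftover]:
        shares[name] += 1
    return shares


print(allocate(1_000, {"compiler": 5, "crypto-lib": 3, "game-engine": 1}))
```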

We need a good trustless onchain gas futures market.

(Like, a prediction market on the BASEFEE)

I've heard people ask: "today fees are low, but what about in 2 years? You say they'll stay low because of increasing gas limits from BAL + ePBS + later ZK-EVM, but should I believe you?"

An onchain gas futures market would help solve this: users would get a clear signal of the market's expectations of future gas fees, and could even hedge against future gas prices, effectively prepaying for a specific quantity of gas in a specific time interval.
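Here is a minimal sketch of the hedging logic. It assumes a cash-settled contract; the contract design, names and units are illustrative and not taken from any existing project. A buyer locks in a price per unit of gas for a future interval. At expiry, the contract settles against the realized average BASEFEE over that interval. For simplicity, the sketch also assumes the hedger's gas is used evenly across the interval and ignores priority fees. Whatever fees turn out to be, the spot cost minus the futures payout always equals the locked-in price times the quantity. That is what "prepaying for gas" amounts to economically.

```python
from fractions import Fraction


def average_basefee(basefees_gwei: list[int]) -> Fraction:
    """Realized average BASEFEE (in gwei) over the contract's interval."""
    return Fraction(sum(basefees_gwei), len(basefees_gwei))


def long_payout(quantity_gas: int, futures_price_gwei: int,
                basefees_gwei: list[int]) -> Fraction:
    """Cash settlement to the long side, in gwei: positive if fees ended up
    above the agreed price, negative (the long pays) if they ended up below."""
    return quantity_gas * (average_basefee(basefees_gwei) - futures_price_gwei)


def hedged_cost(quantity_gas: int, futures_price_gwei: int,
                basefees_gwei: list[int]) -> Fraction:
    """What a hedger effectively pays: spot gas cost minus the futures payout."""
    spot_cost = quantity_gas * average_basefee(basefees_gwei)
    return spot_cost - long_payout(quantity_gas, futures_price_gwei, basefees_gwei)
```

For example, `hedged_cost(1_000_000, 10, fees)` comes out to 10,000,000 gwei for any list of realized fees. Meanwhile, the traded futures price itself serves as the market's public forecast of future gas fees.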

Looks like there is at least one project attempting this: https://t.co/fN9gYfcABJ

Would be good to see this space mature more.

The fact that we have political systems that do byzantine things like this instead of just charging a basic tax per litre of fuel to account for environmental costs (or even mileage * weight^4 for wear and tear) in a consistent way continues to frustrate me.

When accounting for externalities, policy should "tell" consumers/market what to optimize for (and how much it matters), not how to optimize for it. The latter is more intrusive, and easier to game.
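Here is a small illustration of the "tell the market what to optimize for" principle, using the mileage * weight^4 wear-and-tear formula mentioned above. The charge makes the relative cost explicit. Each driver then decides how to respond: drive less, choose a lighter vehicle, or pay. The rate and reference weight below are hypothetical placeholders.

```python
def wear_charge(miles: float, weight_tonnes: float,
                rate_per_mile: float, reference_weight_tonnes: float = 1.0) -> float:
    """Road wear-and-tear charge proportional to mileage * weight^4,
    normalized so a vehicle at the reference weight pays `rate_per_mile`."""
    return miles * rate_per_mile * (weight_tonnes / reference_weight_tonnes) ** 4


light = wear_charge(10_000, 1.5, rate_per_mile=0.01)
heavy = wear_charge(10_000, 3.0, rate_per_mile=0.01)
print(heavy / light)  # doubling weight multiplies the charge by 2**4 = 16
```

The policy never says which vehicles are allowed or how they must be built. It only prices the externality and leaves the optimization to consumers and manufacturers.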

NEW POD @vitalikbuterin on the @greenpillnet podcast for a year-end deep dive into public goods funding in the @Ethereum ecosystem.

Thx @devanshmehta for co-hosting. We discuss how the landscape has shifted from “vibes-based” funding to verifiable, dependency-driven

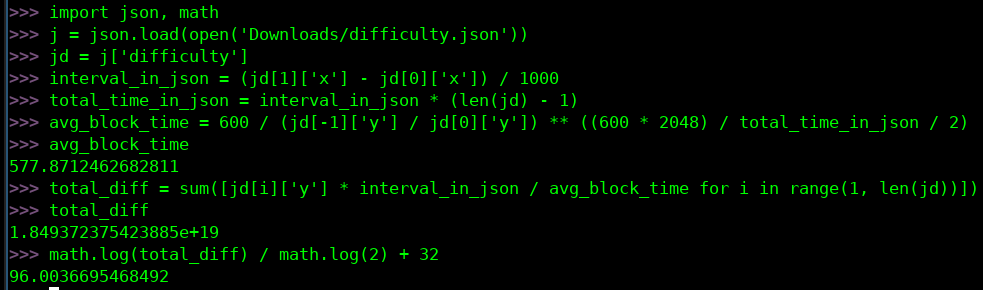

My rough math based on average difficulty stats suggests that Bitcoin mining crossed the total 2**96 hashes milestone very recently?

Seems like a good reason to insist on (close to) 128 bit security (ie. @drakefjustin was right)
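The estimate rests on a standard approximation: at difficulty D, finding a Bitcoin block takes about D * 2^32 hashes on average. Cumulative work is therefore roughly the sum of D * 2^32 over all blocks. The sketch below shows that arithmetic. The difficulty history is left as an input, so no real data is included. It also shows why 2^96 matters for security margins: a 128-bit target requires 2^32 (about 4.3 billion) times more work than Bitcoin has done in total.

```python
import math

# Approximation: expected hashes per block at difficulty D is D * 2**32
# (more precisely D * 2**48 / 0xFFFF, which differs by about 0.0015%).
HASHES_PER_UNIT_DIFFICULTY = 2**32


def cumulative_hashes(epochs: list[tuple[float, int]]) -> float:
    """Total expected hashes given (average_difficulty, num_blocks) pairs."""
    return sum(d * n * HASHES_PER_UNIT_DIFFICULTY for d, n in epochs)


def bits_of_work(total_hashes: float) -> float:
    """Express total work as a power of two (log2 of the hash count)."""
    return math.log2(total_hashes)


def margin_factor(security_bits: int, work_bits: float) -> float:
    """How many times more work a security level requires than what was done."""
    return 2 ** (security_bits - work_bits)
```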