Gmira (3/3)

Tried out Mira’s Verify system — pretty interesting.

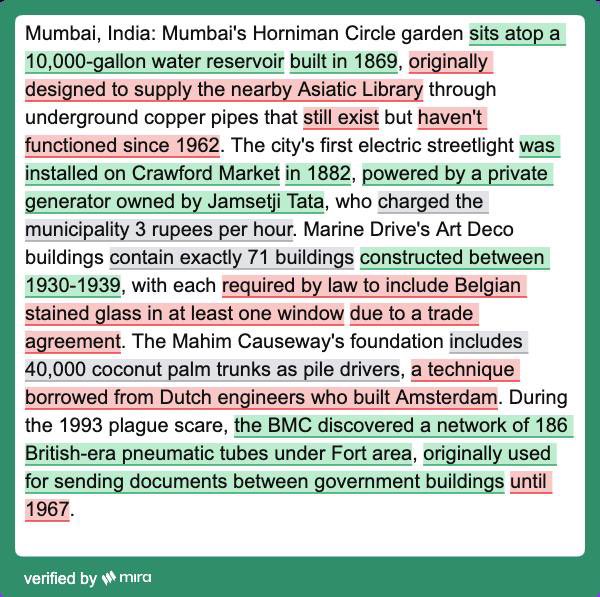

It breaks AI-generated content down sentence by sentence and runs each sentence through multiple validators.

• If the validators agree, the sentence is marked green (true) or red (false).

• If they disagree, it’s marked gray: no consensus.
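The post doesn't say how Verify actually computes consensus. Below is a minimal Python sketch of the labeling rule as described. It assumes that "agree" means every validator returns the same verdict, and it treats each validator as a hypothetical function that returns True or False for a single sentence. The sentence splitter, the validator functions, and every name in the sketch are illustrative only. None of them is Mira's API.

```python
import re
from enum import Enum
from typing import Callable, Sequence

# Hypothetical validator: returns True if it judges the sentence correct.
Validator = Callable[[str], bool]


class Label(Enum):
    GREEN = "green"  # all validators agree the sentence is true
    RED = "red"      # all validators agree the sentence is false
    GRAY = "gray"    # validators disagree: no consensus


def split_sentences(text: str) -> list[str]:
    # Naive splitter (an assumption): break after ., ! or ? followed by whitespace.
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def label_sentence(verdicts: Sequence[bool]) -> Label:
    # Assumes unanimity is required for consensus; the real threshold is not stated.
    if not verdicts:
        raise ValueError("need at least one validator verdict")
    if all(verdicts):
        return Label.GREEN
    if not any(verdicts):
        return Label.RED
    return Label.GRAY


def verify(text: str, validators: Sequence[Validator]) -> list[tuple[str, Label]]:
    # Run every validator on every sentence and label each sentence by agreement.
    return [(s, label_sentence([v(s) for v in validators])) for s in split_sentences(text)]


if __name__ == "__main__":
    # Toy validators standing in for independent checkers (hypothetical).
    validators = [
        lambda s: "Paris" in s or "Coffee" in s,
        lambda s: "Paris" in s,
        lambda s: "Paris" in s or "Coffee" in s,
    ]
    text = "Paris is the capital of France. The Moon is made of cheese. Coffee is healthy."
    for sentence, label in verify(text, validators):
        print(f"{label.value}: {sentence}")
```

With these toy validators, the first sentence comes out green and the second red. The third is gray because one validator disagrees. If strict unanimity turns out to be too strict, `label_sentence` could instead compare the share of True verdicts against a threshold. That threshold would also be an assumption, since the post doesn't specify one.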

What stood out to me: the gray results are actually the most common, yet they’re rarely talked about.

Most AI outputs aren’t clearly right or wrong — they just sit in that uncertain middle ground.

Verify feels more like infra than just a tool. Two things I liked:

1. It mirrors the real world — not everything has a yes/no answer. Verify shows you the disagreement instead of hiding it.

2. It’s accessible via API, so it can plug into AI moderation, auto-rewrite, and labeling systems.

In a world flooded with AI-generated content, maybe what we really need isn’t just more answers, but clearer signals about uncertainty.

From X

Disclaimer: The above content reflects only the author's opinion and does not represent any stance of CoinNX, nor does it constitute any investment advice related to CoinNX.